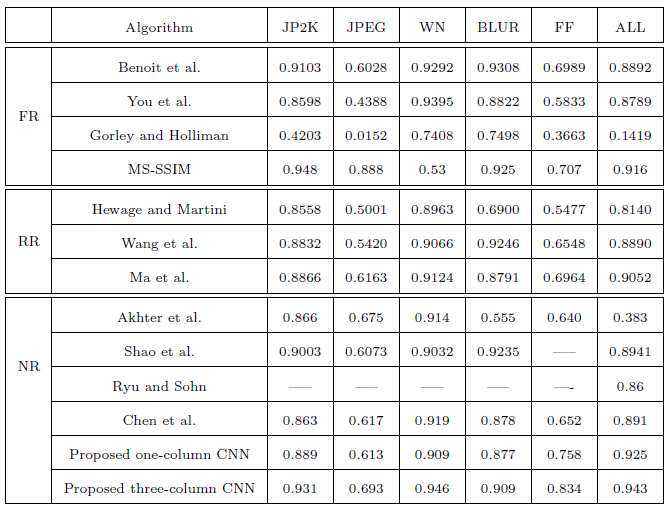

| Method | Reference |
| --- | --- |
| Benoit et al. | A. Benoit, P. Le Callet, P. Campisi, and R. Cousseau, "Quality assessment of stereoscopic images", EURASIP Journal on Image and Video Processing, 2009. |
| You et al. | J. You, L. Xing, A. Perkis, and X. Wang, "Perceptual quality assessment for stereoscopic images based on 2D image quality metrics and disparity analysis", International Workshop on Video Processing and Quality Metrics for Consumer Electronics, 2010. |
| Gorley and Holliman | P. Gorley and N. Holliman, "Stereoscopic image quality metrics and compression", Proc. SPIE, 2008. |
| MS-SSIM | M. J. Chen, C. C. Su, D. K. Kwon, L. K. Cormack, and A. C. Bovik, "Full-reference quality assessment of stereopairs accounting for rivalry", Proc. Asilomar Conference on Signals, Systems and Computers, 2012. |
| Hewage and Martini | C. Hewage, S. T. Worrall, S. Dogan, and A. M. Kondoz, "Prediction of stereoscopic video quality using objective quality models of 2-D video", Electronics Letters, 2008. |
| Wang et al. | Z. Wang, E. P. Simoncelli, and A. C. Bovik, "Multiscale structural similarity for image quality assessment", Proc. Asilomar Conference on Signals, Systems, and Computers, 2003. |
| Ma et al. | L. Ma, X. Wang, Q. Liu, and K. N. Ngan, "Reorganized DCT-based image representation for reduced reference stereoscopic image quality assessment", Neurocomputing, 2016. [accept] |
| Akhter et al. | R. Akhter, J. Baltes, Z. M. Parvez Sazzad, and Y. Horita, "No reference stereoscopic image quality assessment", Proc. SPIE, 2010. |
| Shao et al. | F. Shao, W. Lin, S. Wang, G. Jiang, and M. Yu, "Blind image quality assessment for stereoscopic images using binocular guided quality lookup and visual codebook", IEEE Transactions on Broadcasting, 2015. |
| Ryu and Sohn | S. Ryu, D. H. Kim, and K. Sohn, "Stereoscopic image quality metric based on binocular perception model", IEEE International Conference on Image Processing, 2012. |
| Chen et al. | M. J. Chen, L. K. Cormack, and A. C. Bovik, "No-reference quality assessment of natural stereopairs", IEEE Transactions on Image Processing, 2013. |

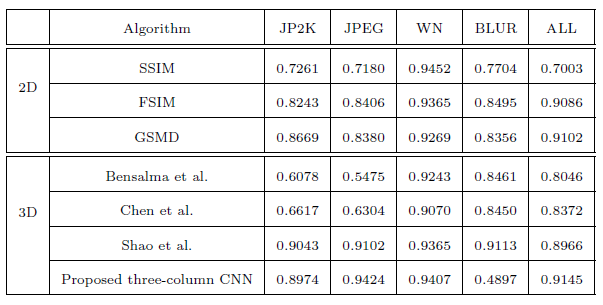

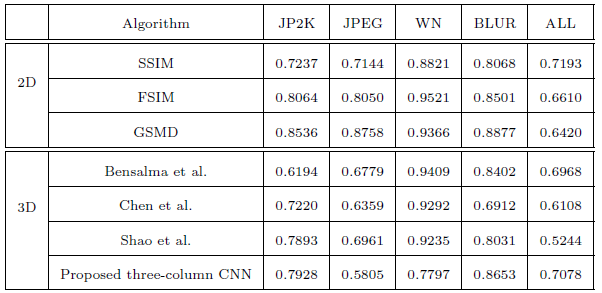

| Method | Reference |
| --- | --- |
| SSIM | Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, "Image quality assessment: From error visibility to structural similarity", IEEE Transactions on Image Processing, 2004. |
| FSIM | L. Zhang, L. Zhang, X. Mou, and D. Zhang, "FSIM: A feature similarity index for image quality assessment", IEEE Transactions on Image Processing, 2011. |
| GMSD | W. Xue, L. Zhang, X. Mou, and A. C. Bovik, "Gradient magnitude similarity deviation: A highly efficient perceptual image quality index", IEEE Transactions on Image Processing, 2014. |
| Bensalma et al. | R. Bensalma and M.-C. Larabi, "A perceptual metric for stereoscopic image quality assessment based on the binocular energy", Multidimensional Systems and Signal Processing, 2013. |
| Chen et al. | M. J. Chen, C. C. Su, D. K. Kwon, L. K. Cormack, and A. C. Bovik, "Full-reference quality assessment of stereopairs accounting for rivalry", Proc. Asilomar Conference on Signals, Systems and Computers, 2012. |

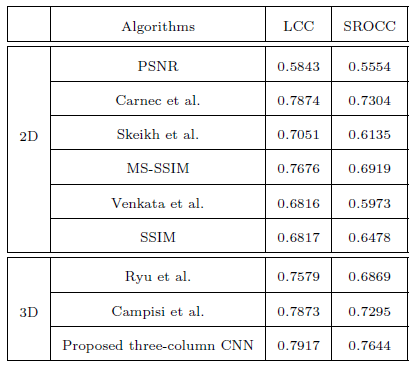

| Method | Reference |
| --- | --- |
| Carnec et al. | M. Carnec, P. Le Callet, and D. Barba, "An image quality assessment method based on perception of structural information", IEEE International Conference on Image Processing, 2003. |
| Sheikh et al. | H. R. Sheikh, A. C. Bovik, and G. de Veciana, "An information fidelity criterion for image quality assessment using natural scene statistics", IEEE Transactions on Image Processing, 2005. |
| Venkata et al. | N. Damera-Venkata, T. D. Kite, W. S. Geisler, B. L. Evans, and A. C. Bovik, "Image quality assessment based on a degradation model", IEEE Transactions on Image Processing, 2000. |
| Ryu et al. | S. Ryu, D. H. Kim, and K. Sohn, "Stereoscopic image quality metric based on binocular perception model", IEEE International Conference on Image Processing, 2012. |
| Campisi et al. | P. Campisi, P. Le Callet, and E. Marini, "Stereoscopic images quality assessment", European Signal Processing Conference, 2007. |